Propagation of uncertainty

In statistics, propagation of error (or propagation of uncertainty) is the effect of variables' uncertainties (or errors) on the uncertainty of a function based on them. When the variables are the values of experimental measurements they have uncertainties due to measurement limitations (e.g., instrument precision) which propagate to the combination of variables in the function.

The uncertainty is usually defined by the absolute error. Uncertainties can also be defined by the relative error (Δx)/x, which is usually written as a percentage.

Most commonly the error on a quantity, Δx, is given as the standard deviation, σ. Standard deviation is the positive square root of variance, σ². The value of a quantity and its error are often expressed as x ± Δx. If the statistical probability distribution of the variable is known or can be assumed, it is possible to derive confidence limits to describe the region within which the true value of the variable may be found. For example, the 68% confidence limits for a one-dimensional variable belonging to a normal distribution are ± one standard deviation from the value; that is, there is a 68% probability that the true value lies in the region x ± σ. The figure of 68% is approximate: the exact probability corresponding to one standard deviation is slightly larger, about 68.27%.
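The coverage probability of x ± kσ for a normal distribution can be checked directly from the error function. The following is a minimal sketch; the helper name `coverage` is chosen here for illustration only.

```python
import math

def coverage(k: float) -> float:
    """Probability that a normally distributed value lies within
    k standard deviations of its mean: erf(k / sqrt(2))."""
    return math.erf(k / math.sqrt(2.0))

for k in (1, 2, 3):
    print(f"x ± {k}σ covers {100 * coverage(k):.2f}% of a normal distribution")
```

For k = 1 this gives about 68.27%, slightly more than the rounded 68% quoted in the text.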

If the variables are correlated, then covariance must be taken into account.


Linear combinations

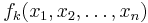

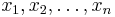

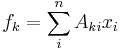

Let  be a set of m functions which are linear combinations of

be a set of m functions which are linear combinations of  variables

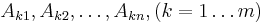

variables  with combination coefficients

with combination coefficients  .

.

or

or

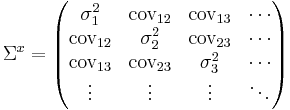

and let the variance-covariance matrix on x be denoted by  .

.

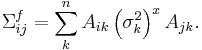

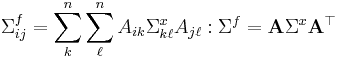

Then, the variance-covariance matrix  , of f is given by

, of f is given by

.

.

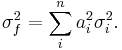

This is the most general expression for the propagation of error from one set of variables onto another. When the errors on x are un-correlated the general expression simplifies to

Note that even though the errors on x may be un-correlated, their errors on f are always correlated.

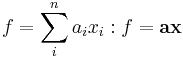

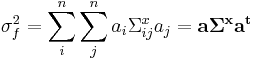

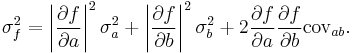

The general expressions for a single function, f, are a little simpler.

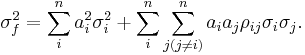

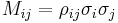

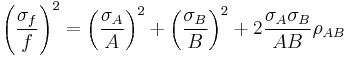

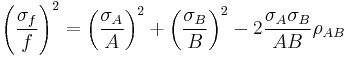

Each covariance term,  can be expressed in terms of the correlation coefficient

can be expressed in terms of the correlation coefficient  by

by  , so that an alternative expression for the variance of f is

, so that an alternative expression for the variance of f is

In the case that the variables x are uncorrelated this simplifies further to

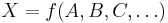

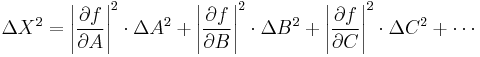
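The linear case can be sketched numerically with NumPy. The coefficient matrix, standard deviations and correlations below are hypothetical values chosen for illustration; the convention assumed is that column k of `A` holds the coefficients of the k-th function, so that f = Aᵀx.

```python
import numpy as np

# Hypothetical example: n = 3 variables, m = 2 linear functions.
# Column k of A holds the coefficients of f_k, i.e. f = A^T x.
A = np.array([[1.0,  1.0],
              [2.0, -1.0],
              [0.0,  0.5]])

sigma_x = np.array([0.1, 0.2, 0.3])        # standard deviations of x (assumed)
rho = np.array([[1.0, 0.5, 0.0],
                [0.5, 1.0, 0.0],
                [0.0, 0.0, 1.0]])          # correlation matrix of x (assumed)

# Covariance matrix of x: element (i, j) is rho_ij * sigma_i * sigma_j.
cov_x = rho * np.outer(sigma_x, sigma_x)

# Covariance matrix of f for linear combinations: A^T cov_x A.
cov_f = A.T @ cov_x @ A

# Single function f = a^T x: the variance is the scalar a^T cov_x a.
a = A[:, 0]
var_f = a @ cov_x @ a

# Uncorrelated inputs still give correlated outputs in general.
cov_f_uncorrelated = A.T @ np.diag(sigma_x**2) @ A

print(cov_f)
print(var_f)
print(cov_f_uncorrelated[0, 1])
```

With these numbers the variance of the first function is 0.21, and the off-diagonal element of `cov_f_uncorrelated` is -0.07, which illustrates that uncorrelated inputs do not imply uncorrelated outputs.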

Non-linear combinations

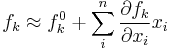

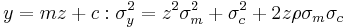

When f is a set of non-linear combination of the variables x, it must usually be linearized by approximation to a first-order Taylor series expansion, though in some cases, exact formulas can be derived that do not depend on the expansion.[1]

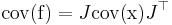

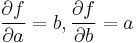

where  denotes the partial derivative of fk with respect to the i-th variable. Or in matrix notation,

denotes the partial derivative of fk with respect to the i-th variable. Or in matrix notation,

where J is the Jacobian matrix. Since f0k is a constant it does not contribute to the error on f. Therefore, the propagation of error follows the linear case, above, but replacing the linear coefficients, Aik and Ajk by the partial derivatives,  and

and  . In matrix notation,

. In matrix notation,

.[1]

.[1]

That is, the Jacobian of the function is used to transform the rows and columns of the covariance of the argument.
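As a rough illustration of the Jacobian rule, the sketch below estimates the Jacobian by central finite differences and applies J Σ Jᵀ. The helper names `numerical_jacobian` and `propagate`, the step size, and the Cartesian-to-polar test function are assumptions made for this example; an analytic Jacobian or automatic differentiation would serve equally well.

```python
import numpy as np

def numerical_jacobian(func, x, eps=1e-6):
    """Central-difference estimate of J[k, i] = d f_k / d x_i at x."""
    x = np.asarray(x, dtype=float)
    f0 = np.atleast_1d(func(x))
    J = np.empty((f0.size, x.size))
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = eps * max(1.0, abs(x[i]))
        f_plus = np.atleast_1d(func(x + step))
        f_minus = np.atleast_1d(func(x - step))
        J[:, i] = (f_plus - f_minus) / (2.0 * step[i])
    return J

def propagate(func, x, cov_x):
    """First-order propagation: covariance of f is J cov_x J^T."""
    J = numerical_jacobian(func, x)
    return J @ np.asarray(cov_x, dtype=float) @ J.T

def to_polar(v):
    """Hypothetical test function: Cartesian (x, y) to polar (r, theta)."""
    x, y = v
    return np.array([np.hypot(x, y), np.arctan2(y, x)])

x0 = np.array([3.0, 4.0])
cov_x = np.diag([0.1**2, 0.1**2])      # uncorrelated inputs, sigma = 0.1 each
cov_f = propagate(to_polar, x0, cov_x)
print(cov_f)
```

For this point the analytic result is a variance of 0.01 for r and 0.0004 for θ, with zero covariance between them, which the finite-difference estimate should reproduce to within rounding.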

Example

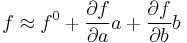

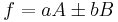

Any non-linear function, f(a,b), of two variables, a and b, can be expanded as

hence:

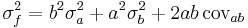

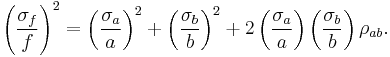

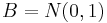

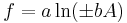

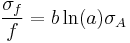

In the particular case that  ,

,  . Then

. Then

or
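The product case f = ab can be checked with a few lines of arithmetic. The sketch below evaluates the first-order variance in both its absolute and relative forms; the function name and the numerical values are hypothetical.

```python
import math

def product_variance(a, b, sigma_a, sigma_b, rho=0.0):
    """First-order variance of f = a * b, using df/da = b and df/db = a,
    with covariance cov_ab = rho * sigma_a * sigma_b."""
    cov_ab = rho * sigma_a * sigma_b
    return b**2 * sigma_a**2 + a**2 * sigma_b**2 + 2.0 * a * b * cov_ab

# Hypothetical measurements: a = 10 ± 0.2, b = 5 ± 0.1, correlation 0.3.
a, b, sigma_a, sigma_b, rho = 10.0, 5.0, 0.2, 0.1, 0.3

var_abs = product_variance(a, b, sigma_a, sigma_b, rho)

# Same quantity from the relative form, multiplied back by f^2.
rel_var = ((sigma_a / a) ** 2 + (sigma_b / b) ** 2
           + 2.0 * (sigma_a / a) * (sigma_b / b) * rho)
var_rel = (a * b) ** 2 * rel_var

print(var_abs, var_rel, math.sqrt(var_abs))
```

Both forms give a variance of 2.6 for these inputs, so f = 50 with a standard deviation of about 1.61.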

Caveats and warnings

Error estimates for non-linear functions are biased on account of using a truncated series expansion. The extent of this bias depends on the nature of the function. For example, the bias on the error calculated for log(1+x) increases as x increases, since the expansion to x is a good approximation only when x is near zero.

In data-fitting applications it is often possible to assume that measurement errors are uncorrelated. Nevertheless, parameters derived from these measurements, such as least-squares parameters, will be correlated. For example, in linear regression, the errors on slope and intercept will be correlated and the term with the correlation coefficient, ρ, can make a significant contribution to the error on a calculated value.

In the special case of the inverse $1/B$ where $B \sim N(0,1)$, the distribution is a reciprocal normal distribution and there is no definable variance. For such ratio distributions, probabilities can still be defined for intervals, either by Monte Carlo simulation or, in some cases, by using the Geary-Hinkley transformation.[2]
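A Monte Carlo interval for a ratio can be sketched as follows. The means, standard deviations, sample size and seed are hypothetical, and the two inputs are assumed independent and normally distributed; the first-order estimate is computed alongside for comparison.

```python
import numpy as np

rng = np.random.default_rng(seed=0)    # fixed seed so the sketch is reproducible

# Hypothetical measurements: A = 10 ± 1 and B = 5 ± 0.5, independent and normal.
mean_a, sigma_a = 10.0, 1.0
mean_b, sigma_b = 5.0, 0.5

a = rng.normal(mean_a, sigma_a, size=200_000)
b = rng.normal(mean_b, sigma_b, size=200_000)
ratio = a / b

# Percentile interval taken directly from the simulated ratios;
# it does not rely on the ratio having a finite variance.
low, high = np.percentile(ratio, [2.5, 97.5])

# First-order (linearized) standard deviation for comparison.
f0 = mean_a / mean_b
sigma_lin = f0 * np.sqrt((sigma_a / mean_a) ** 2 + (sigma_b / mean_b) ** 2)

print(f"Monte Carlo 95% interval: [{low:.3f}, {high:.3f}]")
print(f"Linearized estimate: {f0:.3f} ± {sigma_lin:.3f}")
```

The simulated interval is typically not symmetric about the ratio of the means, which the linearized estimate cannot capture.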

Example formulas

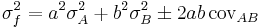

This table shows the variances of simple functions of the real variables  , with standard deviations

, with standard deviations  , correlation coefficient

, correlation coefficient  and precisely-known real-valued constants

and precisely-known real-valued constants  .

.

-

Function Variance

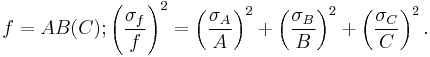

For uncorrelated variables the covariance terms are zero. Expressions for more complicated functions can be derived by combining simpler functions. For example, repeated multiplication, assuming no correlation gives,

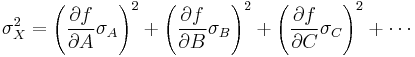

Partial derivatives

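Given $X = f(A, B, C, \dots)$, the absolute error and the variance of X are:

- Absolute error: $\Delta X = \left|\frac{\partial f}{\partial A}\right| \Delta A + \left|\frac{\partial f}{\partial B}\right| \Delta B + \left|\frac{\partial f}{\partial C}\right| \Delta C + \cdots$

- Variance (for uncorrelated variables): $\sigma_X^2 = \left(\frac{\partial f}{\partial A} \sigma_A\right)^2 + \left(\frac{\partial f}{\partial B} \sigma_B\right)^2 + \left(\frac{\partial f}{\partial C} \sigma_C\right)^2 + \cdots$ [3]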

Example calculation: Inverse tangent function

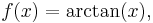

We can calculate the uncertainty propagation for the inverse tangent function as an example of using partial derivatives to propagate error.

Define

where  is the absolute uncertainty on our measurement of

is the absolute uncertainty on our measurement of  .

.

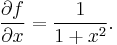

The partial derivative of  with respect to

with respect to  is

is

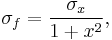

Therefore, our propagated uncertainty is

where  is the absolute propagated uncertainty.

is the absolute propagated uncertainty.

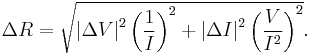
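The inverse tangent result can be verified numerically. The sketch below uses the derivative of arctan(x), which is 1/(1 + x²), and compares it with a finite-difference slope; the function name and the sample values are illustrative only.

```python
import math

def arctan_uncertainty(x: float, sigma_x: float) -> float:
    """First-order propagated uncertainty of arctan(x): sigma_x / (1 + x^2)."""
    return sigma_x / (1.0 + x * x)

x, sigma_x = 1.0, 0.1                  # hypothetical measurement x = 1.0 ± 0.1
sigma_f = arctan_uncertainty(x, sigma_x)

# Cross-check the derivative with a central finite difference.
h = 1e-6
slope = (math.atan(x + h) - math.atan(x - h)) / (2.0 * h)

print(f"arctan({x}) = {math.atan(x):.4f} ± {sigma_f:.4f} rad")
print(f"finite-difference slope = {slope:.6f}")
```

At x = 1 the derivative is 0.5, so an input uncertainty of 0.1 propagates to about 0.05 rad.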

Example application: Resistance measurement

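A practical application is an experiment in which one measures current, I, and voltage, V, on a resistor in order to determine the resistance, R, using Ohm's law,

$$R = \frac{V}{I}.$$

Given the measured variables with uncertainties, I ± ΔI and V ± ΔV, the uncertainty in the computed quantity, ΔR, is (assuming uncorrelated errors)

$$\Delta R = \sqrt{\left(\frac{\Delta V}{I}\right)^2 + \left(\frac{V}{I^2} \Delta I\right)^2} = R \sqrt{\left(\frac{\Delta V}{V}\right)^2 + \left(\frac{\Delta I}{I}\right)^2}.$$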
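The resistance calculation can be sketched in a few lines, assuming the errors on V and I are uncorrelated. The function name and the measured values are hypothetical.

```python
import math

def resistance_with_uncertainty(v, dv, i, di):
    """Return (R, dR) for R = V / I, assuming uncorrelated errors on V and I.

    dR combines the partial-derivative contributions in quadrature:
    dR/dV = 1 / I and dR/dI = -V / I**2.
    """
    r = v / i
    dr = math.sqrt((dv / i) ** 2 + (v * di / i**2) ** 2)
    return r, dr

# Hypothetical measurement: V = 12.0 ± 0.1 V, I = 2.00 ± 0.05 A.
R, dR = resistance_with_uncertainty(12.0, 0.1, 2.0, 0.05)
print(f"R = {R:.2f} ± {dR:.2f} ohm")

# Equivalent relative form: dR = R * sqrt((dV/V)^2 + (dI/I)^2).
dR_rel = R * math.sqrt((0.1 / 12.0) ** 2 + (0.05 / 2.0) ** 2)
```

Here the current measurement dominates: its 2.5% relative uncertainty is three times the voltage's 0.83%, so improving the ammeter would reduce ΔR the most.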

See also

- Experimental uncertainty analysis

- Errors and residuals in statistics

- Accuracy and precision

- Delta method

- Significance arithmetic

- Automatic differentiation

- List of uncertainty propagation software

- Interval finite element

Notes

- ^ Leo Goodman (1960). "On the Exact Variance of Products". Journal of the American Statistical Association 55 (292): 708–713. doi:10.2307/2281592. JSTOR 2281592.

- ^ Jack Hayya, Donald Armstrong and Nicolas Gressis (July 1975). "A Note on the Ratio of Two Normally Distributed Variables". Management Science 21 (11): 1338–1341. doi:10.1287/mnsc.21.11.1338. JSTOR 2629897.

- ^ Vern Lindberg (2009-10-05). "Uncertainties and Error Propagation". Uncertainties, Graphing, and the Vernier Caliper. Rochester Institute of Technology. p. 1. Archived from the original on 2004-11-12. http://web.archive.org/web/*/http://www.rit.edu/~uphysics/uncertainties/Uncertaintiespart2.html. Retrieved 2007-04-20. "The guiding principle in all cases is to consider the most pessimistic situation."

References

This has been a standard text used in undergraduate science and engineering for more than 40 years:

- Bevington, P.R. and Robinson, D.K. (2002) Data Reduction and Error Analysis for the Physical Sciences, 3rd Ed., McGraw-Hill ISBN 0071199268

This text has detailed propagation-of-error material:

- Meyer, S.L. (1975) Data Analysis for Scientists and Engineers, Wiley ISBN 0-471-59995-6

External links

- A detailed discussion of measurements and the propagation of uncertainty, explaining the benefits of using error propagation formulas and Monte Carlo simulations instead of simple significance arithmetic.

- Uncertainties and Error Propagation, Vern Lindberg's Guide to Uncertainties and Error Propagation.

- GUM, Guide to the Expression of Uncertainty in Measurement

- EPFL An Introduction to Error Propagation, Derivation, Meaning and Examples of Cy = Fx Cx Fx'

- uncertainties package, a program/library for transparently performing calculations with uncertainties (and error correlations).